In no particular order, here’s some stuff I saw at SIGGRAPH 2010.

Especially recommended:

- •ADB (Art Gallery)

- •TRON: Legacy (Special Session)

- •Example-based Facial Rigging (Talk)

- •Compositing Avatar (Talk)

Feathers for Mystical Creatures: Creating Feathers for Clash of the Titans

James Meaning

Fur & Related Technology Lead MPC

Requirements

- •Full screen close-up

- •Convincing Shading - match plates of horses and humans

- •Efficient LOD

- •Opening and Closing Wings

- •Over 100 shots

- •Multiple Characters in one shot

- •Fit into pipeline

- •Short development time

Furtility at MPC

- •All fur and hair in film and commercials

- •Started for the movie 10,000BC

- •Subsequently used on Wolfman, Harry Potter 6, Prince of Persia, Narnia 2

Feathers at MPC

- •Killer Dinosaurs (cards -- but procedurally created in MR shaders)

- •Griffon in Narnia 2 (cards and extruded planes)

New Tool for Clash of the Titans: Feather Designer

- •Modeled every single barb on the feathers

- •Set up a Base Distribution

- •Then procedurally increase distribution

- •Assign a temporary colour

- •base length, inclination, length noise, curl, base scraggle, and clump fur system

- •Additional curl spine geometries

- •Writes out all of this as a Feather Template

They actually had only 10-12 feather templates, but the proceduralism allowed them to be highly varied.

To get a more interesting appearance they would bake the simple textures out and let the texture artists paint a series of textures. Those got re-baked onto the UV space and mapped back onto the feathers.

They generated curves from their fur system and then built the feathers there.

You could preview a dozen or so in Maya.

For non-hero feathers, you defined the feathers at modeling stage.

It did a bunch of checking to make sure the feathers basically didn't intersect. Under all the feathers, they put a lower level of fur to cover the skin.

Animation & Dynamics

- •Animators had proxy feathers in their rig.

- •They also deformed invisible NURBS planes, then mapped the spines of the feathers onto those planes, picking up the orientation & twist from the NURBS plane as well. This guaranteed there were no intersections while still avoiding any stretching.

For hero shots, you could pre-generate the curves and then put extra deformations on top of that (dynamics & turbulence etc.).

Asset Management

All the caches, etc. were rebuilt automatically whenever a new upstream asset was checked in.

Rendering

- •If you just threw this at PRMan, it didn't render so well…

- •In the RMan procedural, they generate the curves and figure out which template they're going to use. That lets you take the bounding box and bound the memory use pretty accurately.

LOD

- •Had a whole LOD system that could automatically turn down barb count and increase width. That worked great for static LOD.

- •They also built a system where they just randomly removed barbs and increased width, which they used when they needed dynamic LOD (it didn't look as good), but most shots were able to use static LOD.

- •They also used the generated feathers (the same ones they use for Maya preview) as the geometry to calculate ambient occlusion. That way they never had to ray-trace against the full curves for each barb.
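The dynamic LOD idea above (randomly drop barbs, widen the rest) can be sketched roughly as follows. This is only my own illustration, not MPC's code: the function name, the `(barb_id, width)` representation, and the rule of scaling widths so total coverage stays constant are all assumptions.

```python
import random

def prune_barbs(barbs, keep_fraction, seed=0):
    """Randomly keep a fraction of barbs and widen the survivors.

    barbs: list of (barb_id, width) tuples (a hypothetical representation).
    keep_fraction: value in (0, 1]; 1.0 keeps every barb.
    Survivor widths are scaled by original_count / kept_count so the
    summed width stays constant (an assumed compensation rule).
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    if not barbs:
        return []
    rng = random.Random(seed)  # fixed seed keeps the choice stable per feather
    kept = [b for b in barbs if rng.random() < keep_fraction]
    if not kept:               # never drop the whole feather
        kept = [barbs[0]]
    scale = len(barbs) / len(kept)
    return [(barb_id, width * scale) for barb_id, width in kept]

barbs = [(i, 0.01) for i in range(1000)]
coarse = prune_barbs(barbs, keep_fraction=0.25)
```

Seeding the generator per feather is one way to keep the same barbs from frame to frame; how the real system kept the result from popping as the camera moved was not covered in the talk.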

Curves

They don't use Maya curves, they have their own curve node in Maya which represents all curves in the scene as one Maya node.

Art-Directed Trees in Disney's Tangled

Andrew Selle

A short version of this talk is summarized at

http://portal.acm.org/ft_gateway.cfm?id=1837095&type=pdf&coll=GUIDE&dl=GUIDE&CFID=100627488&CFTOKEN=46591266

Intro

Develop end-to-end tree pipeline

- •modeling and look

- •procedural detail

- •rendering

- •but art-directable

Art-directed trees in the real world: Showed picture of Bonsai

Yet Another Tree System?

Many tree algorithms out there... our focus was:

- •art direction

- •unified art direction

- •pipeline

Trees in Bolt

- •Mostly sparse single trees

- •Slow, difficult to manage

- •Trees were not specially handled

Driven by Tree Initiative: an exercise prior to production where a cross-disciplinary team from design, technical direction, and modeling worked on a possible pathway for developing trees.

Testbed to Production

- •Tree Initiative well-received

- •Said they wanted it in production in 8 weeks

Tangled Concept Art: Flynn hanging from a cliff tree with a certain shape

First, decided on a Unified Representation of the tree

- •Hierarchy of Splines and parents of the splines

Modeling Cycle

Initial Input

- •Tree Sketch -> Splines

- •Hand model -> Polygons

- •Created tools to convert in either direction between them

- •Arthur made a tool called TreeSketch that created spline hierarchies interactively in Maya

- •Then made a tool that previewed the bark mesh from that

- •To go from polygons to splines, wrote a tool based on a skeletonization paper

Look

- •Developed a system called Dendro - creates procedural detail

- • Creates fine procedural detail

- • small twigs/leaves

- • saves artist time

- • Logistics

- • Renderman procedural for scalability

- • interactive preview

- • Decided to make actual connected twigs

- • Initially tried to get away without connecting branches to canopy

- • But this gap was visible in too many shots

- • Growth model

- • Considered L-systems

- • Unintuitive

- • Instead, use particles to simulate growth

- • Create bud particle, let it meander, branch, instance leaves

- • Dendro Canopy

- • They art-directed the canopy shape as well

- • They built a polygonal approximation to that and used it to direct the Dendro model
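The particle growth model above (create a bud, let it meander, branch, instance leaves) might look something like this in 2D. It is a toy sketch under my own assumptions, not Dendro itself: every parameter name and value is made up, and the canopy-shape guidance is left out.

```python
import math
import random

def grow_twigs(start, heading, steps=30, step_len=0.1, wander=0.3,
               branch_prob=0.1, max_depth=2, seed=1):
    """Grow connected twig polylines by marching bud particles.

    Returns (twigs, leaves): twigs is a list of polylines (lists of
    (x, y) points); leaves holds the tip of each twig, where a leaf
    would be instanced. All parameters are illustrative assumptions.
    """
    rng = random.Random(seed)
    twigs, leaves = [], []
    # Each bud: (position, heading angle, steps to march, branch depth)
    buds = [(start, heading, steps, 0)]
    while buds:
        (x, y), angle, remaining, depth = buds.pop()
        path = [(x, y)]
        for i in range(remaining):
            angle += rng.uniform(-wander, wander)       # meander
            x += step_len * math.cos(angle)
            y += step_len * math.sin(angle)
            path.append((x, y))
            child_steps = (remaining - i) // 2          # children are shorter
            if depth < max_depth and child_steps > 0 and rng.random() < branch_prob:
                side = rng.choice((-1.0, 1.0)) * math.radians(35.0)
                # The child starts exactly on the parent, so twigs stay connected.
                buds.append(((x, y), angle + side, child_steps, depth + 1))
        twigs.append(path)
        leaves.append(path[-1])                         # leaf at each tip
    return twigs, leaves

twigs, leaves = grow_twigs(start=(0.0, 0.0), heading=math.pi / 2.0)
```

Compared with an L-system, the knobs here (wander, branch probability, step length) map directly to what you see, which I take to be the point of the "unintuitive" remark.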

Texture Synthesis

- •Used exemplar texture synthesis technique

- •Had to create a flow texture along the tree

Rendering

- •Used Precomputed Radiance Transfer (PRT) mixed with subsurface scattering

- • Used one value per leaf

- • Ran PRT on the whole canopy at once

- • Also used Deep Shadows

- • Used low-order PRT (order 3)

- • If you used high-order it might replace Deep Shadows

- • But you might have to recompute it when it moves

- • Used real leaf normals for rendering

- •Could bake this data at asset commit time

- •The actual lighting was super-fast, could even do real-time preview

- •LOD

- • Used Brickmaps

- • Eventually reduces tree to a single disk

- • Also Used Stochastic Pruning

- • Drop leaves, make leaves bigger

- • Reduced rendering time and memory

- • The Brickmaps produced a bigger speedup

- • But there were issues with getting the textures to match
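To make the "super-fast lighting" point concrete: with PRT, shading a leaf at render time is just a dot product between a baked transfer vector and the light's coefficients. The sketch below assumes "order 3" means three spherical-harmonic bands (9 coefficients) and one scalar transfer vector per leaf; the leaf values and the light are made up, and the distant light is crudely approximated by evaluating the basis in its direction.

```python
def sh9(x, y, z):
    """Real spherical-harmonic basis, bands 0-2, for a unit direction."""
    return [
        0.282095,
        0.488603 * y, 0.488603 * z, 0.488603 * x,
        1.092548 * x * y, 1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z, 0.546274 * (x * x - y * y),
    ]

def relight(transfer, light_coeffs):
    """Shade one leaf: dot product of baked transfer and light vectors."""
    return sum(t * l for t, l in zip(transfer, light_coeffs))

# Hypothetical overhead light of intensity 2, projected onto the basis.
light = [2.0 * c for c in sh9(0.0, 0.0, 1.0)]
# Made-up transfer vector standing in for values baked at asset commit time.
leaf_transfer = [0.8, 0.0, 0.4, 0.0, 0.0, 0.0, 0.1, 0.0, 0.0]
radiance = relight(leaf_transfer, light)
```

Nine multiply-adds per leaf is why a real-time preview is plausible; the expensive part (computing the transfer vectors for the whole canopy) happens once at bake time.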

Programmable Line-drawing for 3D scenes

University of Grenoble

Goal: shading language for line drawings a la RenderMan Shading Language

Input: 3D Scene

From this, compute (all algorithms from prior work):

Silhouette

Contour

Suggestive Outlines

From these, build a 2D view map

Take that and the style description (in a Python-like language) and render the scene

The style description is Pythonic:

    class myShader(StrokeShader):
        def shade(self, stroke):
            …

You can also select portions of the view map to apply shaders to using predicates

Next, you can provide an iterator that changes the ordering & topology of strokes

Finally, there's a sort operator that enables area-based simplification

Future Work

Temporal Coherence: there isn't any now, so you can't make animations

Shading language for regions

Software is open source and is being integrated into Blender

http://freeeestyleintegration.wordpress.com/

Advances in Real-time Rendering Session

Sample Distribution Shadow Maps (Intel)

Also tried k-means clustering

Adaptive logarithmic

These require a depth histogram

Implemented two ways

Reduce up on depth buffer (can be done on DX 9/10)

0.9 msec on nVidia 480

Full implementation with histogram

7 msec

Performance Left 4 Dead 2 720p

~13 msec/frame
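As I understood the cheaper variant, you reduce the depth buffer to its min and max and then place the shadow cascades logarithmically inside that range. Here is a CPU-side sketch of that idea; the function names, the "far clear value" convention, and the sample depths are my own assumptions, and the real thing runs as a GPU reduction.

```python
def depth_bounds(depth_buffer, far_clear=1.0e30):
    """Min/max reduction over view-space depths, skipping empty pixels."""
    visible = [d for d in depth_buffer if d < far_clear]
    if not visible:
        raise ValueError("no visible samples in the depth buffer")
    return min(visible), max(visible)

def log_partitions(z_min, z_max, count):
    """Split [z_min, z_max] into `count` cascades at logarithmic intervals."""
    ratio = z_max / z_min
    return [z_min * ratio ** (i / count) for i in range(count + 1)]

depths = [2.0, 3.5, 8.0, 1.0e30, 32.0]   # hypothetical view-space depths
z_min, z_max = depth_bounds(depths)
splits = log_partitions(z_min, z_max, 4)  # roughly 2, 4, 8, 16, 32
```

Fitting the splits to the depths actually on screen, rather than to the camera's near and far planes, is what keeps shadow-map resolution from being wasted on empty space.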

Adaptive Volumetric Shadow Maps (Intel)

Fundamentally you want to compute the visibility curve showing the fraction of light that is passing through the material at a given depth.

Deep Shadow Maps:

Capture visibility curve and compress

Easy to implement but too slow for real time

Opacity Shadow Maps:

Slice space at regular intervals

Has aliased artifacts

Depth Range Dependent

Fourier Opacity Mapping:

Express visibility functions as a Fourier series

Get ringing artifacts

Depth Range Dependent

AVSM:

Use a fixed number of nodes to store the visibility curves

(at each texel in the shadow map)

When a new light blocker is added:

Multiply the two curves together

Simplify the curve back down by deleting the points that matter the least

Designed to be parallel but GPUs don't have atomic read-modify-write

Therefore you end up with race conditions

Conclusion: Robust easy-to-use real-time volumetric shadows

Few tweaking times

Unfortunately lack of atomic read-modify-write means fast implementation requires unbounded memory
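The per-texel update described above (multiply in the new blocker, then delete the least important point) can be sketched on the CPU like this. It is my own simplification, not Intel's implementation: the curve is a sorted list of `(depth, transmittance)` nodes, the product of the two curves is only evaluated at the node depths, and "matters the least" is assumed to mean the smallest change in area under the curve.

```python
def sample(curve, depth):
    """Linearly interpolate a sorted (depth, transmittance) curve."""
    if depth <= curve[0][0]:
        return curve[0][1]
    for (d0, t0), (d1, t1) in zip(curve, curve[1:]):
        if depth <= d1:
            return t0 + (t1 - t0) * (depth - d0) / (d1 - d0)
    return curve[-1][1]

def least_important(curve):
    """Index of the interior node whose removal changes the area least."""
    def area_change(i):
        (d0, t0), (d1, t1), (d2, t2) = curve[i - 1], curve[i], curve[i + 1]
        # Area of the triangle formed by the node and its two neighbours.
        return abs((d1 - d0) * (t2 - t0) - (d2 - d0) * (t1 - t0)) / 2.0
    return min(range(1, len(curve) - 1), key=area_change)

def add_blocker(curve, start, end, trans, max_nodes=4):
    """Multiply a blocker spanning [start, end] into the curve, then compress.

    trans is the fraction of light the blocker lets through;
    max_nodes (assumed >= 2) is the fixed per-texel node budget.
    """
    blocker = [(start, 1.0), (end, trans)]
    depths = sorted({d for d, _ in curve} | {start, end})
    merged = [(d, sample(curve, d) * sample(blocker, d)) for d in depths]
    while len(merged) > max_nodes:
        merged.pop(least_important(merged))
    return merged

curve = [(0.0, 1.0), (100.0, 1.0)]            # fully lit texel
curve = add_blocker(curve, 10.0, 12.0, 0.5)   # hypothetical smoke puffs
curve = add_blocker(curve, 30.0, 35.0, 0.5)
```

Each `add_blocker` call is exactly the read-modify-write the talk was complaining about: two fragments landing on the same texel at once would both read the old curve and one update would be lost.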

Uncharted 2 Character Lighting & Shading

John Hable

Naughty Dog

Uncharted 2 was one of the biggest games of the year last year, famous for its integration of character and story into widely varied gameplay. John was the Character Shading Lead for Uncharted 2.

Todo list for this talk:

- •Skin

- •Hair

- •Cloth

- •+ 5 Secrets

Skin

- •d'Eon et al (nVidia Human Head Demo) is the best real-time skin

- •Divides the incoming light up into light (mostly cyan) that does not scatter and light (mostly reddish) that does

- •They render the light in UV space then blur it 5 times

- •Combine this with color filtering to get the result

- •This tends to turn normals that point towards the light a little cyanish and normals that point away a little reddish

In Uncharted 2, we needed something faster (our game is in ShaderX7).

You can think of the real nVidia technique as a 100-tap filter created during those 5 blur steps. One simplification was to approximate the 100-tap filter with a 12-tap filter; that’s faster, but it wasn’t fast enough for all of our situations.

Hacky 1-pass Approach: Bent Normals

- •Pretend the R, G, and B normals are different from the normal map.

- •R points more to the geometric normal

- •G & B point more at the light

- •Looks better than diffuse but creates a blue patch at grazing angle

Blended Normals:

- •Do calculation twice,

- • Once with near-geometry normals

- • Again with near-light normals

- •Take a color-weighted blend

Uncharted 2: We use 12-tap combined for cutscenes and Blended Normals in-game
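Here is how I picture the Blended Normals trick: run plain diffuse lighting twice with two different normals, then mix the two results with a different weight per color channel. This is a sketch from my notes, not the shipped shader; the weights are hypothetical, and the choice to have red lean on the near-geometry normal is carried over from the bent-normals bullets above.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def lambert(normal, light_dir):
    """Clamped N.L diffuse term for unit vectors."""
    return max(0.0, dot(normal, light_dir))

def blended_normals_diffuse(n_near_geometry, n_near_light, light_dir,
                            weights=(0.8, 0.3, 0.3)):
    """Light twice, then blend the two results per color channel.

    weights are hypothetical (R, G, B) blend factors: 1.0 uses only
    the near-geometry result, 0.0 only the near-light result.
    """
    d_geometry = lambert(n_near_geometry, light_dir)
    d_light = lambert(n_near_light, light_dir)
    return tuple(w * d_geometry + (1.0 - w) * d_light for w in weights)

light = (0.0, 0.0, 1.0)
n_near_geometry = (0.0, 0.0, 1.0)               # faces the light head-on
n_near_light = (math.sqrt(0.75), 0.0, 0.5)      # tilted 60 degrees away
rgb = blended_normals_diffuse(n_near_geometry, n_near_light, light)
```

Because the blend happens after lighting rather than on the normals themselves, each channel stays a mix of two ordinary diffuse terms, which is presumably what avoids the blue patch at grazing angles.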

Hair

We basically used the technique from Thorsten Scheuermann (Kajiya-Kay spec)

http://developer.amd.com/media/gpu_assets/Scheuermann-HairRendering-Sketch-SIG04.pdf

- •diffuse wraparound

- •No self-shadow

- •Desaturated Diffuse map as Specular Map
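For reference, the two hair ingredients named above are small formulas. This sketch shows a common form of wraparound diffuse and the Kajiya-Kay specular term (which uses the hair tangent instead of a normal); the wrap amount and exponent are placeholder values, not Uncharted 2's settings.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def wrap_diffuse(n_dot_l, wrap=0.5):
    """Diffuse term that lets light wrap past the terminator."""
    return max(0.0, (n_dot_l + wrap) / (1.0 + wrap))

def kajiya_kay_spec(tangent, half_vec, exponent=64.0):
    """Kajiya-Kay highlight: sin of the angle between tangent and half vector."""
    t_dot_h = dot(tangent, half_vec)
    sin_th = math.sqrt(max(0.0, 1.0 - t_dot_h * t_dot_h))
    return sin_th ** exponent

# Strand running along x, half vector straight up: full highlight.
peak = kajiya_kay_spec((1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
# Half vector along the strand: no highlight.
none = kajiya_kay_spec((1.0, 0.0, 0.0), (1.0, 0.0, 0.0))
```

The specular map from the last bullet would simply multiply the `kajiya_kay_spec` result per texel.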

Cloth

- •A lot of people say cloth doesn’t have specular.

- • Use a polarizer and look -- it does so

- •It also has a fresnel term

- •In Uncharted 2:

- • Rim lobe

- • An Inner lobe that points at ???

- • Plus some diffuse

- • Not based on light direction (!)

Five Secrets

Secret #1: Avoid the Hacks as Long as Possible

- •Hacks don’t work!

- •Look great in stills

- •Not when you move lights/camera

- •Photoshop tricks only work in stills

Secret #2: Avoid wraparound light models

- •A few exceptions (Hair)

- •OK for ambient

- •Harsh falloff is your friend

- •If you want skin to look softer, change your shading model, not your lighting

Secret #3: Avoid blurry texture maps on faces

- •Put detail into both the diffuse and specular maps

- •Soften it with SSS, not in the maps

- •It’s supposed to look terrible in Maya!

Secret #4: Don’t Bake Too Much Lighting into Diffuse Maps

- •If your diffuse maps have near-full luminance they become unlightable

- •Keep the AO as a separate map - it’s OK if it’s low-res

Secret #5: Make Sure your AO and Diffuse Match

- •We screwed this up

- •Don’t play Telephone adjusting one Character at a Time

Conclusion

- •Use Custom Shading Models

- •Linear-space Lighting -- You Gotta Do It

- •Even if you tried and rejected it a few years ago, try again

Kelemen/Szirmay-Kalos is a good model for specular modulation if you can afford it.

- •Uncharted 2 did a Blinn-Phong approximation instead
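On the linear-space lighting point from the conclusion: the idea is to decode sRGB textures to linear values, light them, and only then re-encode for display. A small sketch using the standard sRGB transfer functions; the texel and light values are made up.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(l):
    """Encode a linear-light value in [0, 1] back to sRGB."""
    if l <= 0.0031308:
        return l * 12.92
    return 1.055 * l ** (1.0 / 2.4) - 0.055

texel = 0.5   # sRGB-encoded albedo from a texture
light = 2.0   # hypothetical light intensity

# Wrong: multiplying the encoded value blows out to white.
gamma_space = min(1.0, texel * light)
# Right: decode, light, clamp, re-encode.
linear_space = linear_to_srgb(min(1.0, srgb_to_linear(texel) * light))
```

Here the gamma-space result clips to white while the linear-space one lands around 0.69, which is the kind of difference that makes gamma-space lighting look harsh and overblown.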

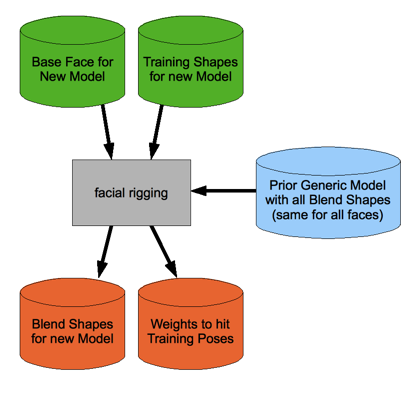

Example-based Facial Rigging

ETH Zurich

Existing Approaches

Joint-based

Physical simulation-based

Blend shapes

Cartoony character: 30-70 blendshapes

Photorealistic character: up to 1000

Expression Retargeting: ports blend shapes from one base shape to another

Papers: Expression Cloning, Deformation Transfer

Rigging by Example: The Goal

Basic Method

Compute a set of blend shapes with fixed weights

Compute a set of weights with those fixed blend shapes

Iterate until it converges

Used Deformation Transfer to compute the blend shapes

(mentioned something about computing in gradient space to get a smoother result)
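The alternating structure of the Basic Method can be shown with a drastically reduced toy: a single blend shape, one weight per example pose, and plain least squares for each half-step. This is only my illustration of the iteration; the real method handles many shapes and, as far as I could tell, keeps them close to the Deformation Transfer result, which this sketch does not attempt.

```python
def fit_one_blend_shape(examples, iterations=50, tol=1e-12):
    """Alternately solve for one blend shape and per-example weights.

    examples: list of equal-length displacement vectors (offsets from
    the neutral face), assumed not all zero.
    Returns (shape, weights) reducing sum ||example_i - w_i * shape||^2.
    """
    n = len(examples[0])
    weights = [1.0] * len(examples)
    shape = [0.0] * n
    prev_err = float("inf")
    for _ in range(iterations):
        # Step 1: weights fixed, least-squares solve for the shape.
        ww = sum(w * w for w in weights)
        shape = [sum(w * e[j] for w, e in zip(weights, examples)) / ww
                 for j in range(n)]
        # Step 2: shape fixed, least-squares solve for each weight.
        ss = sum(s * s for s in shape)
        weights = [sum(e[j] * shape[j] for j in range(n)) / ss
                   for e in examples]
        err = sum((e[j] - w * shape[j]) ** 2
                  for w, e in zip(weights, examples) for j in range(n))
        if prev_err - err < tol:   # converged
            break
        prev_err = err
    return shape, weights

# Three hypothetical example poses that are all scalings of one motion.
examples = [[1.0, 2.0, 0.0], [2.0, 4.0, 0.0], [0.5, 1.0, 0.0]]
shape, weights = fit_one_blend_shape(examples)
```

Note the scale ambiguity: doubling the shape and halving every weight fits equally well, which is one reason a real system needs some prior on what the shapes should look like.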

Facial Action Coding System – see previous work from Filmakademie

The paper is online at http://people.agg.ethz.ch/~hli/Hao_Li/Hao_Li_-_publications_[Example-Based_Facial_Rigging].html.

Interactive Generation of Human Animation with Deformable Motion Models

Texas A & M University

I didn’t bother writing up the talk; it is yet another system for generating bad animation quickly.

Compositing Avatar

WETA Digital

Scale of the show:

- •2500 shots

- •50,000 rendered elements

- •stereo

Started in Late 2006

- •No stereo compositing packages were available on the market

- •Tried comping left and right separately with different compositing graphs

- •It was a file management nightmare

Developed the Stereo EXR format

- •Multiple views in one file

- •Channel naming convention

- •Non-stereo-aware software loads the “hero eye”

- •Wanted to foment industry adoption

- •Now part of OpenEXR 1.7 (implemented in Nuke, RV, etc.)
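The channel naming convention is what makes the "hero eye" fallback work. As I understand it (treat the details as my reading, not the spec), the hero view's channels keep their plain names, other views put the view name just before the channel name, and the file header lists the views with the hero first. A sketch of resolving a channel name to its view, with a hypothetical helper name:

```python
def view_of_channel(name, views):
    """Return the view a channel belongs to, or None if it is in no view.

    views: view names from the file header; views[0] is assumed to be
    the hero (default) view.
    """
    parts = name.split(".")
    if len(parts) == 1:
        return views[0]          # plain "R", "G", "B": hero view
    if parts[-2] in views:
        return parts[-2]         # "right.R" or "diffuse.right.R"
    return None                  # layered channel with no view component

views = ["left", "right"]
hero = view_of_channel("R", views)
other = view_of_channel("diffuse.right.R", views)
```

Software that knows nothing about stereo just asks for "R", "G", "B" and gets the hero eye without any special casing.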

Developed stereo version of Shake

- •Added SEXR-aware operators

Stereo playback: RV

Silhouette: Stereo-aware roto/paint tool

Ocula: stereo-aware compositing nodes

Deep Compositing

- •How to comp volumetric, soft elements?

- •Z compositing gives crappy comps

- •Therefore, you render with holdouts

- •But this is slow & painful – especially with 50,000 rendered elements!

- •So they ultimately rendered without holdouts and computed holdouts in the comp

Deep Alpha Maps

- •Each pixel is a list of depths and alpha values

- •They are produced by the deep shadow support in the renderer

- •Using this data we can compute holdout mattes for an element when we need them
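Computing a holdout from a deep alpha map comes down to accumulating the opacity of every sample in front of the element you are holding out. A per-pixel sketch, assuming each deep pixel is a list of `(depth, alpha)` samples and the element sits at a single depth; the names and sample values are mine, not WETA's.

```python
def holdout_alpha(deep_pixel, depth):
    """Fraction of an element at `depth` hidden by the deep pixel's samples."""
    transmittance = 1.0
    for sample_depth, alpha in sorted(deep_pixel):
        if sample_depth >= depth:
            break                       # sample is behind the element
        transmittance *= 1.0 - alpha    # light surviving this sample
    return 1.0 - transmittance

# Hypothetical smoke pixel: two soft samples, then something opaque.
smoke = [(5.0, 0.5), (8.0, 0.5), (20.0, 1.0)]
behind_smoke = holdout_alpha(smoke, 10.0)   # 0.75 of the element is hidden
in_front = holdout_alpha(smoke, 3.0)        # 0.0, nothing in front of it
```

The element's color would then be multiplied by `1 - holdout` before being merged, so nothing has to be re-rendered when the element moves in depth.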

Built a UI for automatically generating the Shake file

Did all DOF defocus in comp by using the deep alpha maps

- •The edge never got crunchy – unlike Z-based depth blur

Volumetrics and Deep Shadows

- •Can't afford to store per voxel layer

- •So, output layers only when a significant sample occurred

WETA Water

WETA Digital

Used in-house viscous solver

Greatly enhanced the level-set compositors

Wrote GPU-based visualizers for level sets, vector fields, scalar fields, etc.

- •Enabled directing the simulation in-progress

- •Meshing was too slow to wait for

The crashing-wave shot emitted hundreds of millions of particles

- •Implemented a GPU-based fluid solver to do the air simulation in this shot

The system for compositing level sets, etc. was called Deluge and was implemented as a series of Maya plug-ins

Prep & Landing Snow Effects Process

Disney

Prep and Landing was a TV Special produced at Walt Disney Feature Animation

They had 4 people to do 150 snow shots in 4 months

Ultimately did everything with sprites, instances, volumes, and displacements

Wanted to avoid per-shot simulations

Variety of snow types, lots of snow interactions including fur, hair, etc.

Techniques:

- •Automate snow setup on shot build

- •R & D creating snow types

- •40 snow types cached to disk

- •In each shot, the shot artist placed the pre-simulated snow types

- •Were mostly successful at avoiding per-shot simulations

Emerging Technologies / Art Show / The Studio

ADB (the Robotic Ferret) (Art Gallery)

Nicholas Stedman and Kerry Sega

This awesome picture is by Doran Barons http://www.flickr.com/photos/dopey/

This was the coolest thing at the show (and it has nothing to do with computer graphics). The ADB is a robotic creature built out of about 10 organically-shaped segments printed on a 3D printer. Each segment has a microprocessor, contact sensors on the sides, a little bit of battery power and two rings that connect it to the adjacent segments. The rings have a servomotor in them so each segment can turn relative to the adjacent ones, and the rings aren’t parallel, so the creature can curl up.

The creature is programmed so that when you touch it, it curls up towards the place where it’s being touched -- in other words, it’s attracted to touch. You can easily overpower the servos, so you can always reconfigure it if it gets too snarled up. It also sometimes makes spontaneous movements, all of which creates a real sensation that it’s alive when you’re holding it.

A lot of people I describe this to think it sounds kind of creepy, but actually it’s a great experience. Unfortunately, it doesn’t translate well to pictures or verbal description -- it’s really something you have to hold in your arms to understand (in that sense, it’s a perfect interactive experience -- you can’t understand it from the web).

ADB seems like it should be from Japan, but in fact it was made by two artists from Toronto. They were really nice; after experiencing ADB I stayed and talked to them for quite a while. Funnily enough, another thing I noticed about this exhibit was the concentration of current and former Pixarians at it: just before me Rick Sayre was there, and my friends Bena and Tristan came up right after me.

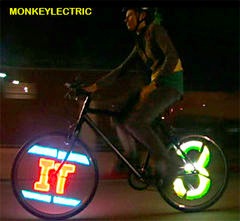

Monkey Lectric (The Studio)

http://www.MonkeyLectric.com/

I’ve actually met some of the folks from Monkey Lectric before; I almost ordered one last year and still plan on getting one eventually. Basically, they figured out that if you mount a strip with a bunch of LEDs along a bicycle spoke and ride the bike, you have a potential display in radial coordinates. If you put three such strips around the bike wheel and ride at a reasonable speed, persistence of vision will give you a full disk of display surface (two seconds looking at the web site will get the point across faster than my prose). It’s a great idea, and to make the point of how general-purpose it is, this year they had a bunch of paper with circles printed on it -- you grabbed magic markers, colored your scene on the paper, handed it back to them, and a few seconds later your image was on the rotating bicycle wheel looking out over the room. Totally fun and best of all you can order one right now for your bike!

beacon 2+: Networked Socio-Musical Interaction (Emerging Technologies)

Takahiro Kamatani, Toshiaki Uchiyama, Kenji Suzuki, University of Tsukuba

http://www.ai.iit.tsukuba.ac.jp/images/project-beacon.jpg

This was my favorite eTech installation this year. It’s basically a low cylinder, about 3’ high, that projects a laser line on the floor. It also has a pretty accurate sensor, so it knows if you’re stepping on the line or not. The line rotates around the cylinder and by stepping on it or not you affect the music that’s being generated by a network of a few of these. It was really fun to interact with; I spent about five minutes dancing around the thing. Unfortunately, I couldn’t really see where the networked aspect added anything -- if you want to make something emergent happen with a network of these I think you’d need to have them within eyesight of each other.

Tools for Improved Social Interaction (Art Gallery)

Lauren McCarthy UCLA

Winner of the funniest piece at the show, these very postmodern ironic takes on just how interactive we want our garments to be definitely made me laugh. Unlike ADB, you can get the gist of these items from the above website quite nicely!

RayModeler (Emerging Technologies)

Sony Corporation

Sony has built a fully realized prototype of a 3D display. It’s a small cylinder about 6” across and 8” high inside which spinning LEDs create a full-3D luminous volume display that requires no glasses or other attachments, is visible from 360 degrees, and has pretty good brightness. They didn’t mention prices or anything at this point, but it’s a neat and very well-packaged device even if the resolution was a little low. They had a number of information display demo loops, but the funnest thing was an implementation of Breakout that ran on the surface of the cylinder (all the way around). Because it was really 360-degrees, to play the game you had to keep physically walking around the device, which of course I thought was awesome!

Slow Display (Emerging Technologies)

MIT Media Lab/Delft University of Technology/Keio University

http://www.slowdisplay.org/

This was a neat idea: they use a monostable light-reactive material to create a high-resolution display. The material actually changes in response to light so that it is perfectly visible either in the dark or in bright sunlight; furthermore, because the effect persists for a small number of seconds, a display can be sustained with a very low amount of power (a high-resolution display might only require 2 W). However, because the persistence is so long, it can’t display animation or other fast-changing images. The question they pose is basically what applications this is good for -- but it’s a viable and cheap technology.

hanahanahana (“Flower Flower Flower” in Japanese) (Art Gallery)

Yasuaki Kakehi Keio University

One of the few scent-powered installations: by placing a perfume-scented piece of paper onto a network of wall-mounted projecting “flowers”, you determined their growth patterns.

The Lightness of your Touch (Art Gallery)

Henry Kaufman

This was a simulated torso that reacted (slightly) to your touch, and also where your palmprint left behind an afterimage.

Camera-less Smart Laser Projector (Emerging Technologies)

University of Tokyo http://www.k2.t.u-tokyo.ac.jp/

The idea of this augmented-reality research project was to combine a laser projector and a time-of-flight sensor into a single unit. Thus, it can both project information onto a surface (such as a hand or an array of objects on a table) and sense color and depth information from them. The idea seems really neat but none of the live demos were that compelling.

Colorful Touch Palette (Emerging Technologies)

Keio University, University of Tokyo

http://tachilab.org/modules/projects/CTP.html

This was a little thing you clipped onto the end of your finger. It contained a spatial sensor and electrodes that stimulated your fingertips to provide a tactile display based on the luminance or other properties of the rear-projected image you were moving your fingers across. It seemed to be very hard to calibrate -- they had to turn it up all the way in order to get it to work with my fingers. Frankly, while the idea is fine, it’s not something I’d be willing to actually do; the sensation was not pleasant and the spatial resolution of the information was too low to be a meaningful source of input. It was a valid effort to try and build a tactile display, though.

Shaboned Display (Emerging Technologies)

Keio University http://www.xlab.sfc.keio.ac.jp/

OK, I teach at Keio but I’m still dumbfounded by this. They built a grid which can inflate soap bubbles at each point on the grid. The claim is that the existence and size of the soap bubbles constitute some sort of display. Except that um, soap bubbles are kind of hard to see... and they pop... But it was definitely a hunk of messy equipment!

Touch Light through the Leaves: A Tactile Display for Light and Shadow (Emerging Technologies)

Kunihiro Nishimura, University of Tokyo

http://www.cyber.t.u-tokyo.ac.jp/

This was a small cylinder you held in your hand, and it produced vibratory sensations (aka a “tactile display”) on the bottom based on the pattern of light and shadow projected on the top (they set up the installation with patterns that were like leaf dapples). It did just that, but there was not much more depth to it.

In the Line of Sight (Art Gallery)

Daniel Sauter

This installation was impressive to look at (100 stand-mounted, computer-controlled flashlights) but neither I nor anyone I talked to ever was able to understand what it did or how it did or didn’t relate to the viewer. There’s a description in the catalog, but it doesn’t correspond to anything I was able to observe in the gallery.

Strata-Caster (Art Gallery)

Joseph Farbrook Worcester Polytechnic Institute

This was a wheelchair-controlled POV tour through a Second Life environment of imagery that seemed like it was intended to be disturbing (i.e., babies you can’t easily avoid running over). It seemed to be designed to produce some kind of emotion I didn’t experience.

Collada BOF / WebGL

Collada is a file format for 3D graphic data interchange which has been around for quite a few years. The last pipeline I built at Electronic Arts used Collada as its input format, which is a fairly typical use of Collada (Collada is also well-known as the format inside SketchUp 3D, Google’s easy-to-use 3D application used to create the 3D models for Google Earth). Although we don’t have a lot of use for Collada at Polygon (see the entry on Alembic for something coming up that may be more applicable to 3D animation), I always check in on the Collada BOF to see what’s new in that world.

Although none of the applications of Collada presented this year were particularly compelling to me, I did learn about WebGL, which I think is a big deal. WebGL is basically a JavaScript binding for OpenGL ES 2.0, a mobile-device-oriented version of the OpenGL spec.

This makes sense if you look at modern web development, where (for better or for worse) JavaScript is now the implementation language for code that runs in the browser. Thus, the promise of WebGL is that once WebGL-enabled browsers are out, 3D applications can also be written in JavaScript. Since OpenGL itself is implemented in compiled code there’s very reasonable hope that the apps can be high-performance (I’ve written 3D applications in Python using PyOpenGL which convinced me it’s pretty true: these days a dynamic language for the application is fine as long as the graphics package underneath is fast). It could finally be practical to implement real, highly-performant games in the browser.

None of that would mean anything if there weren’t likely to be implementations. However, Mozilla started the WebGL project and has a Firefox implementation, and Google Chrome, Apple WebKit, and Nokia on some of their smartphones have all implemented WebGL (as usual with new web technologies, Microsoft is the holdout).

The other interesting thing I saw at the BOF was a couple of guys from Rightware (http://www.rightware.com/) in Finland demonstrating a really neat development environment they created around this. Basically it is a development tool for creating slick user interfaces for mobile devices using 3D. More and more, GUIs for mobile devices are becoming highly interactive and using sophisticated graphics. In fact, developing the interfaces for some devices this way makes a lot of sense, and their tool allows you to interactively develop the UI in the tool (taking Collada media as input), and then eventually kicks out the code to implement your UI as compiled code for ARM (the processor inside most phones, iPhone and Android included) that expects only to have an OpenGL ES implementation on the target device.

Rightware is a spinout (of Futuremark) and venture-backed, so they have a lot of pressure to produce. The market may be too small for a venture-backed company to succeed in, but it seems like they fulfill a real need for UI development for mobile devices.

Computer Animation Festival / Electronic Theater

I thought this year’s Electronic Theater was a big step up from the terrible show last year, and in fact I thought it was just fine. However, I’ve rarely heard as divergent opinions: a number of people agreed with me it was fine, but another group of friends strongly felt it was too long and boring. I don’t really have an explanation for that (of course, it goes without saying it wasn’t quite as good as the Electronic Theater we managed to put on last year at SIGGRAPH Asia 2009, sorry Isaac ;-).

Here are a few pieces I particularly liked from the ET.

Loom (Best of Show)

No arguments with the Best of Show award this year. Ilija and a couple of collaborators from Filmakademie made an unbelievable piece about a moth and spiderwebs with their typical flair.

The Making of Nuit Blanche

Nuit Blanche is an amazing Canadian film noir done in incredible slo-mo of a man locking eyes with a woman through a restaurant window and the resulting events. Black and white, beautiful and beautifully realized.

Dog Fish

Hysterical Argentinian commercial about a man and his faithful dog fish.

Live Real-Time Demos

The one part of last year’s Computer Animation Festival that was awesome was the live real-time demos conducted before the show -- that’s where I got to see Flower, still one of the most amazing achievements ever in videogames. Frankly, there was nothing that earthshaking this year but the session was still really good. The standout for me was the NVidia Real-time Particle-based Liquid Simulation on the GPU demo with a couple million particles driving a fluid simulation in real-time. The demo was also available all week to play with upstairs; unfortunately, it was the one stand upstairs that was busy all the time.

The Making of TRON: Legacy

For me, one of the highlights of the show was this panel discussion with the Producer, Director, VFX Supervisor, and Animation Supervisor of this winter’s TRON: Legacy feature. In particular, they showed 7 minutes of the final film in glorious stereo! Nothing about the presentation was reassuring in terms of the story of the film, but the aesthetics of the film are going to be gorgeous! And, it is *definitely* a film you should see in 3D. Here are a few tidbits I remember from the presentation:

- •Joseph Kosinski, the Director, has never directed a feature film before; he’s a commercial and game cinematic director.

- •Kosinski was insistent that the world of Tron be a mainframe not connected to the internet; he wanted to stay in the original world, not have “the Facebook army” and “attack of the Twitters” (that was one of the funniest remarks of the panel).

- •For the original TRON way back when, the way they created the glow effect for the suits was to shoot all the live action in black-and-white with the part where they wanted to glow painted white. When they developed that film, they had clear film where the white was and black elsewhere; they then shot the film under an animation camera and backlit the film, producing the glow.

- •For this TRON, Kosinski wanted to often use the glowing suits as the main light source in the scene, so they actually fabricated latex suits with electroluminescent panels for all the actors. The suits each had a battery pack in the small of the back powering the EL panels; the batteries didn’t last very long so the call on-set was “Lights, Cameras, Suits, Action!” All the other lights on the set were dimmed down so that the EL suits were the brightest light.

- •Kosinski does like CG, but even so wanted to do as much of the work practically as possible. Almost all the major sets were built physically, although CG set extensions were of course needed.

- •Jeff Bridges did TRON immediately after Crazy Heart; as someone pointed out, these are about as diametrically opposed as two movies could be. A questioner asked if he had any problems, and the Producer and Director both said, “Of course not, he’s the ultimate professional.”

- •In the new movie, the riders on lightcycles are actually on the outside of the vehicle. This was the original design from Moebius for the first movie, but it was impossible to realize in MAGI Synthavision software, so they changed to the enclosed design seen in the first movie.

- •Syd Mead and Moebius sat in adjacent cubicles in the production design department of the first movie (talk about your dream design department...)

- •The new movie has 18 months of post-production.

- •As was reported, Pixar’s brain trust was called in to watch a rough cut of the movie and give notes before the pickup shoot scheduled in June. Everyone said it was great to get feedback from other filmmakers... not sure if I believed them or not.

- •The movie was shot with two Sony cameras on side-by-side mounts. However, the mount and the cameras are big and heavy enough that the Digital Domain VFX Supervisor talked about all the problems they had keeping the stereo convergence working. If he did the rest of the movie as well as the 7 minutes we saw, I’d say he licked the problem pretty well.

Exhibition

Sohonet

Sohonet is a networking infrastructure and connectivity company that provides high-bandwidth remote video access and preview services for clients. In particular, they sponsored the SIGGRAPH Computer Animation Festival this year by letting all submissions be uploaded directly to Sohonet’s servers and the jurying be done by real-time viewing off of those servers, all at HD resolution. They are definitely one of the leading companies providing this service; unfortunately, I don’t know much about their price structure.

Aspera

Aspera, which occupies a slightly different part of the storage solution, was there as well. Basically, Aspera has an FTP-style server and client which implement a non-TCP-based version of file transfer which they claim will get 3x or more bandwidth from the same underlying network connectivity. So while Sohonet is trying to let you preview everything over the net in real-time, Aspera just tries to optimize your non-real-time FTP-style transfers. I’ve used Aspera before and it works well enough, but it doesn’t impact your workflow much because really it’s just another implementation of secure FTP. However, at the show I learned they have an API, so it might be possible to implement much higher levels of workflow integration.
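To put the "3x or more" claim in perspective, here is a rough back-of-the-envelope sketch in Python. All of the numbers are my own hypothetical assumptions (a 500 GB delivery, a 100 Mbps link, and TCP-based transfer using only 25% of that link); none of them come from Aspera, and the function name is made up for illustration.

```python
def transfer_hours(size_gb, link_mbps, efficiency):
    """Hours needed to move size_gb over a link_mbps link.

    efficiency is the fraction (0-1) of the link the transfer actually uses.
    """
    size_megabits = size_gb * 8 * 1000  # decimal gigabytes -> megabits
    seconds = size_megabits / (link_mbps * efficiency)
    return seconds / 3600

# Hypothetical scenario: 500 GB of frames over a 100 Mbps link.
baseline = transfer_hours(500, 100, 0.25)  # assumed plain FTP utilisation
faster = transfer_hours(500, 100, 0.75)    # same link at 3x the throughput

print(f"baseline: {baseline:.1f} h, with 3x throughput: {faster:.1f} h")
```

Under these assumptions the transfer drops from roughly two days to well under one, which is the kind of difference that matters for overnight deliveries even if the workflow itself doesn't change.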

Microscanners

Several companies were showing very low-cost, low-overhead desktop 3D scanners. For instance, pico scan ltd. showed their namesake product, which attaches a small projector to a Canon EOS 1000D to produce a desktop scanner that can hit 0.1mm accuracy for €1999 (www.picoscan.eu). There were 3 or 4 solutions like this on the floor that can capture shape and color simultaneously.

At Polygon, it doesn’t come up that often that we need to make a model of something that physically exists, but for those companies that do (e.g., for commercials) it seems like these desktop scanners could really speed things up.

Storage

For the first time I can remember, all of the major storage vendors were at SIGGRAPH. Isilon had a booth, as did EMC.

BlueArc (one of the storage vendors that focuses quite a bit on our industry) was there, and one of their success stories was Ilion Animation Studios in Spain, one of Ruth’s former employers and a company with much lower budgets than the Pixars and DreamWorks of the world. Ilion made the feature film Planet 51 (which I hadn’t known), which is the project that forced them to bring in a BlueArc solution. They had 200 artists and 300 physical render nodes, and ran Nuke, Maya, and Max as part of the production. The BlueArc system provided fully OS-independent NFS and CIFS service. Interestingly, they only got up to 90TB of storage total.

I chatted with the Isilon representative as well. Of the two people at the booth, one said they had a great show and one said they had a terrible show (?). I didn’t know that Isilon has a lower-end storage line (the NL series) in addition to their mid-range line that we use (the IQ X-series).

CityEngine

This company, spun out of cutting-edge research a few years ago, continues to make stunning cities via their hybrid of GIS import, piece assembly, and procedural city modeling. It’s important to keep them in mind: if the city sequence in your piece is important enough, this would be a great tool. Like Massive, it would probably take some time to master.